We almost didn't even make this trip. We had seen a total eclipse before, and this one was scheduled for a very inconvenient time for us.

The original plan was to drive to Oklahoma and stay with family. Their place in Oklahoma isn't in the path, but it is close. So the plan was to drive from there to whichever part of the path had the best combination of closeness and good weather. We had penciled in Dallas, since that was the closest spot on the path with historically good weather. The history showed that farther south was more likely to be cloud-free.

As it happened, the weather was very different from the average. New England was clear, while Texas was cloudy with a danger of severe storms. If we had gone with this plan, we probably would have headed towards Arkansas.

For various reasons, we dropped that plan. For a while, we had decided to skip the eclipse altogether. However, another family event, with a different side of the family, came up. They live in Indianapolis, and the event was the Friday before the eclipse on Monday. So our plan changed once again: drive to Indiana, then judge the weather there. We were willing to drive wherever we needed to, but Indianapolis is already in the path, so we were hoping for good weather there.

Three weeks ago, the longest-range forecast was excellent for Indiana, so we decided to go there. Ten days ago, the forecast was about the worst imaginable, with a frontal weather system almost perfectly along the path, cloudy from coast to coast. Since then, the forecast has improved, with Indianapolis having a cloud cover of between 6% and 50% depending on the model and how far out the forecast was.

As late as yesterday I was still contemplating driving to Maine, but we couldn't have taken all the family. We decided to stay here and take our chances. If it was cloudy, we would just see the clouds get dark.

The weather report on Monday morning wasn't great. The front had passed overnight, but there were still lots of cirrus clouds, and I was worried.

As the eclipse started, the sky was still full of cirrus clouds, but they weren't affecting visibility of the partial phase. As usual, the eclipse was only barely noticeable until it got over 90%.

The "party" as such was set up in the back yard, with a table and a bunch of lawn chairs. The rest of the party played cards while I did my usual anti-social thing.

We made a pinhole camera with a cardboard box, foil, paper, and a glue stick. It worked fine, projecting an image maybe a couple of millimeters in diameter but clearly with a bite out of it. We also had a full complement of eclipse glasses and one pair of solar binoculars.

With the binoculars, I could see one sunspot near the center of the sun, maybe at 9:00.

The soundtrack for the partial phase was "There's a little black spot on the Sun today."

The most interesting thing I remember from the partial phase of the 2017 eclipse was the crescent shadows cast by the trees. This time, there were no trees in the back yard. The family had a number of kids, and I called out to ask whether anyone wanted to come out front with me to look for something interesting. There were several trees out front, so I did see the crescent shadows, but it also meant I had my wife and all of the family's kids out front with me during totality.

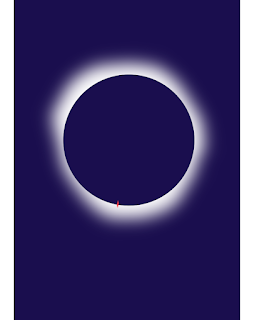

The memorable thing this time was that totality looked like a hole in the sky, like a portal. "There's a hole in the sky, through which things can fly." The eclipse itself was silent of course, but the soundtrack in my head was the sound of the "whale probe" from Star Trek 4.

Also there was a clear red spark at about 6:30. This turned out to be a prominence. I only remember seeing it after a minute or so, but everyone around me saw it too.

The moon was the same color as the sky, and the corona was white. During the 2017 eclipse, there were several long corona streamers. I didn't see anything like that this time -- the corona was a relatively thin band, with a definite sharp inner edge and a fade-out on the outer edge, but still pretty thin. The sketch above is about what I saw, and a couple of my party agree it looked like that.

The sky in general was dark like twilight. I don't remember exactly how dim it got last time, but it might have been dimmer this time.

Totality was long enough for me to get a video of my party, a couple of telephoto images through my camera, and still experience the event fully with my Mk1 eyeballs.

I did see the diamond ring at third contact, for about 2 seconds before putting my glasses back on. There was an overwhelmingly bright patch of sun, but the corona was still visible on the opposite side.